So far we've talked about setting up sources and sinks, shaping the data streams, referencing the data fields, and so on. Within this Pipe framework, Operations are used to act upon the data - e.g., alter it, filter it, analyze it, or transform it. You can use the standard Operations in the Cascading library to create powerful and robust applications by combining them in chains (much like Unix operations such as sed, grep, sort, uniq, and awk). And if you want to go further, it's also very simple to develop custom Operations in Cascading.

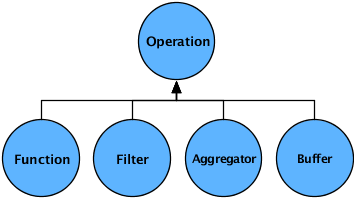

There are four kinds of Operations:

Function, Filter,

Aggregator, and

Buffer.

Operations typically require an input argument Tuple to act on.

And all Operations can return zero or more Tuple object results - except

Filter, which simply returns a Boolean indicating

whether to discard the current Tuple. A Function,

for instance, can parse a string passed by an argument Tuple and return

a new Tuple for every value parsed (i.e., one Tuple for each "word"), or

it may create a single Tuple with every parsed value included as an

element in one Tuple object (e.g., one Tuple with "first-name" and

"last-name" fields).

In theory, a Function can be used as a

Filter by not emitting a Tuple result. However,

the Filter type is optimized for filtering, and

can be combined with logical Operations such as

Not, And,

Or, etc.

During runtime, Operations actually receive arguments as one or

more instances of the TupleEntry object. The

TupleEntry object holds the current Tuple and a

Fields object that defines field names for

positions within the Tuple.

Except for Filter, all Operations must

declare result Fields, and if the actual output does not match the

declaration, the process will fail. For example, consider a

Function written to parse words out of a String

and return a new Tuple for each word. If it declares that its intended

output is a Tuple with a single field named "word", and then returns

more values in the Tuple beyond that single "word", processing will

halt. However, Operations designed to return arbitrary numbers of values

in a result Tuple may declare Fields.UNKNOWN.

The Cascading planner always attempts to "fail fast" where possible by checking the field name dependencies between Pipes and Operations, but there may be some cases the planner can't account for.

All Operations must be wrapped by either an

Each or an Every pipe

instance. The pipe is responsible for passing in an argument Tuple and

accepting the resulting output Tuple.

Operations by default are assumed by the Cascading planner to be

"safe". A safe Operation is idempotent; it can safely execute multiple

times on the exact same record or Tuple; it has no side-effects. If a

custom Operation is not idempotent, the method isSafe()

must return false. This value influences how the Cascading

planner renders the Flow under certain circumstances.